Think of teaching a robot to recognize your face, or to pick the show on Netflix you would most likely want to watch next. How is that supposed to happen? This is where neural networks come into play. In a way, they are the brains behind many of the intelligent technologies that fill our everyday lives. These networks learn from examples, much as we learn to recognize patterns in the world.

When I first learned about neural networks, I imagined small brains inside a computer, forming connections and working with information just like our neurons do. That picture is not far from the truth: neural networks loosely imitate the way our brains work so that a machine can make decisions, recognize images, or even come up with ideas. In this post, I will walk you through the basic ideas behind neural networks, their main types, and why they matter in today’s technology. By the end, you will understand why these networks are important and why they are the unsung heroes behind many things we take for granted every day.

The Basics of Neural Networks

What is a Neural Network?

Okay then, on to the basics. A neural network is really just a very big, special kind of puzzle solver. Instead of hunting for missing pieces, it works out how all the pieces of a problem fit together. Imagine a group project where everyone (each neuron) handles their own bit. No single member can solve the whole puzzle, but together they can tackle even the hardest challenges.

Neural networks achieve this by loosely imitating how our brains process information. Say you are learning to ride a bicycle. At first you wobble and fall, but after some time your brain figures out how to balance, steer, and pedal, three complex things all at once. Similarly, neural networks learn from their mistakes and get better over time. They process information through layers of neurons, each of which handles a chunk of the problem, like picking out the colors in an image or the tone of a voice.

Key Components of Neural Networks

Let’s break it down into simpler pieces.

The three main parts of a neural network:

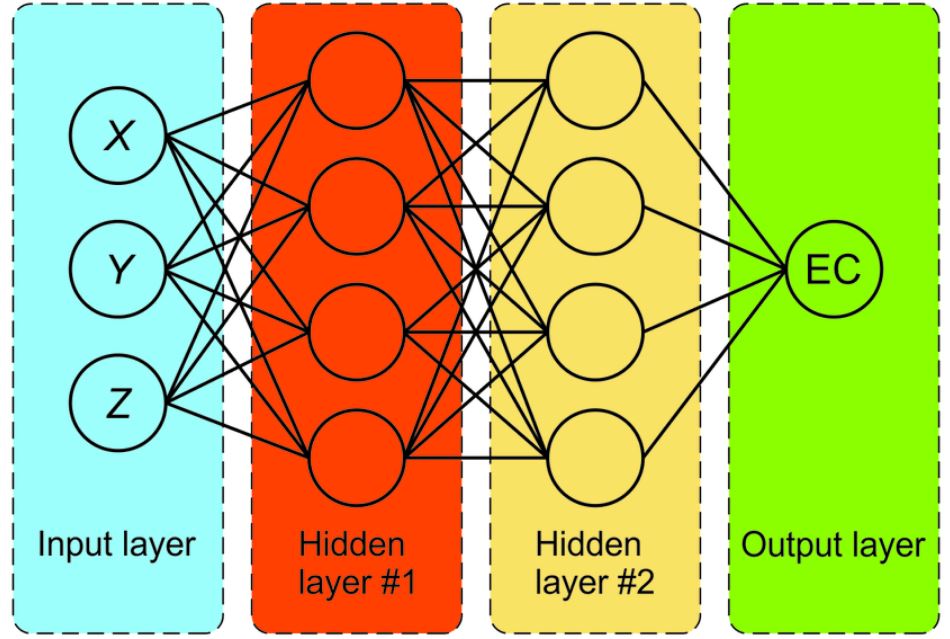

- Input Layer: This is the layer where the data enters the network. When you feed in a photo, for example, each tiny unit of the image (each pixel) gets its own neuron in this layer.

- Hidden Layers: These are the “thinkers,” the layers where the input data is processed and searched for patterns. For instance, if the network is trying to recognize a cat in a photo, its hidden layers might look for edges first, then shapes like ears and whiskers, and finally combine everything to identify a cat.

- Output Layer: This is where the network makes its decision. After all the processing, this layer might say, “Yep, that’s a cat!” or “Nope, that’s a dog.”
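To make the three layers a bit more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the 2x2 "image", the layer sizes, the weight values (hand-picked, not learned), and the helper names `sigmoid` and `dense`. A real network would have far more neurons and would learn its weights from data.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of all inputs,
    adds its bias, and passes the result through sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Input layer: four pixel brightness values from a hypothetical 2x2 image.
pixels = [0.9, 0.1, 0.8, 0.2]

# Hidden layer: three neurons, each with four made-up weights.
hidden_weights = [
    [0.5, -0.4, 0.3, -0.2],
    [-0.3, 0.8, -0.5, 0.1],
    [0.2, 0.2, 0.2, 0.2],
]
hidden_biases = [0.0, 0.1, -0.1]

# Output layer: two neurons, one per label.
output_weights = [
    [1.0, -1.0, 0.5],   # score for "cat"
    [-1.0, 1.0, 0.5],   # score for "dog"
]
output_biases = [0.0, 0.0]

hidden = dense(pixels, hidden_weights, hidden_biases)
scores = dense(hidden, output_weights, output_biases)

labels = ["cat", "dog"]
prediction = labels[scores.index(max(scores))]
print(prediction, scores)
```

The data only ever moves one way here: pixels go in, the hidden layer transforms them, and the output layer picks the label with the highest score.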

How Neural Networks Work

Here’s a fun way to think about how neural networks work. Imagine you are in the middle of a game of 20 Questions. You ask simple questions with quick answers to figure out what object the other person has in mind. A network does something rather similar: it takes in the data in numerical form, passes it through its layers, with each layer “asking” its own questions about what it received, and finally settles on an answer.

For example, if the object were an animal, the early layers might ask, “Does it have fur?” The hidden layers might then continue with further questions, such as “Does it purr?” or “Does it have claws?”, before the network finally guesses, “It’s a cat!” Each neuron answers its small question with a simple calculation, so a complex problem is broken into simple steps that can be automated.
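Here is roughly what one of those "questions" could look like in code. This is only a sketch of a single neuron: the three yes/no features, the weights, and the bias are all made up for the example, and the function name `neuron` is my own.

```python
import math

def neuron(features, weights, bias):
    """Weigh each answer, add them up, and squash the total into (0, 1)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Answers encoded as numbers: [has_fur, purrs, has_claws], 1.0 = yes, 0.0 = no.
# Hypothetical weights: purring counts as the strongest evidence for "cat".
weights = [1.0, 3.0, 1.0]
bias = -3.5

cat_like = neuron([1.0, 1.0, 1.0], weights, bias)  # furry, purrs, has claws
dog_like = neuron([1.0, 0.0, 1.0], weights, bias)  # furry, has claws, no purring

print(f"purring animal: {cat_like:.2f}, non-purring animal: {dog_like:.2f}")
```

An output above 0.5 can be read as "probably a cat" and below 0.5 as "probably not". With these particular weights, fur and claws alone are not enough; the purr tips the balance.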

Types of Neural Networks

Feedforward Neural Networks (FNNs)

Now that we have covered the basics of how neural networks work, we can talk about the simplest kind: Feedforward Neural Networks, or FNNs for short. Think of this network as a one-way street. Information flows in only one direction: from the input layer, through the hidden layers, and on to the output layer. It’s like walking without ever looking back; you just keep going forward.

FNNs are great at simple tasks like deciding whether or not an email is spam. Features of the email, such as the words it contains, are fed into the network, which then arrives at a conclusion. However, since FNNs have no memory of past inputs, they do not do well on tasks where the order of information matters, such as understanding a sentence.
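A small sketch can show why word order gets lost. I am assuming a "bag of words" input here, where the network only sees how often each word appears, and I am using a single output neuron as a stand-in for a full feedforward network. The vocabulary, weights, and bias are invented for the example, not trained.

```python
import math

# Hypothetical vocabulary and hand-picked weights.
VOCAB = ["free", "money", "meeting", "now", "tomorrow"]
WEIGHTS = [1.5, 2.0, -2.0, 0.5, -1.0]
BIAS = -1.0

def bag_of_words(text):
    """Count how often each vocabulary word appears; word order is discarded."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]

def spam_score(text):
    """Feed the word counts forward through one neuron."""
    features = bag_of_words(text)
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

score_a = spam_score("free money now")
score_b = spam_score("now money free")      # same words, shuffled
score_c = spam_score("meeting tomorrow")

print(score_a, score_b, score_c)
```

The first two emails get exactly the same score because the network sees the same counts either way. That is fine for spotting spam words, but it is also why this kind of setup struggles with tasks where the order of the words carries the meaning.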

Convolutional Neural Networks (CNNs)

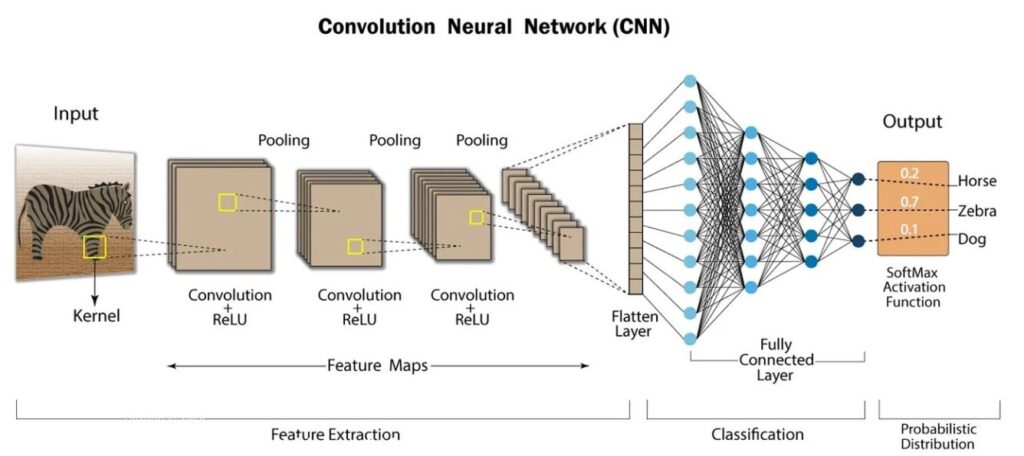

Next is the Convolutional Neural Network (CNN), which acts somewhat like the detective of the neural network world and specializes in analyzing images. Think of a spot-the-difference game, where you scan two pictures side by side, region by region, to find what stands out. CNNs do something similar: they scan over an image piece by piece to find important features.

Suppose we have a CNN trying to identify a dog in an image. It starts by looking at edges and textures, such as the fur or the outline of the ears. As the image moves through the layers, these pieces come together until the network recognizes a dog. CNNs are a big part of why the face recognition feature on your phone works and why apps can apply filters to images so precisely.
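The "scanning" step can be sketched in a few lines. The example below slides a small filter over a tiny image and records how strongly each patch matches it. The 4x4 image and the 2x2 edge filter are made up for illustration. In a real CNN the filter values are learned rather than written by hand, and the function name `convolve2d` is my own.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).
    Like most deep learning libraries, this does not flip the kernel."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[row + i][col + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for col in range(out_w)
        ]
        for row in range(out_h)
    ]

# Tiny grayscale image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Vertical edge filter: responds where brightness jumps from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The middle column of the result lights up because that is where the dark region meets the bright one. Flat regions, whether all dark or all bright, produce zero.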

Recurrent Neural Networks (RNNs)

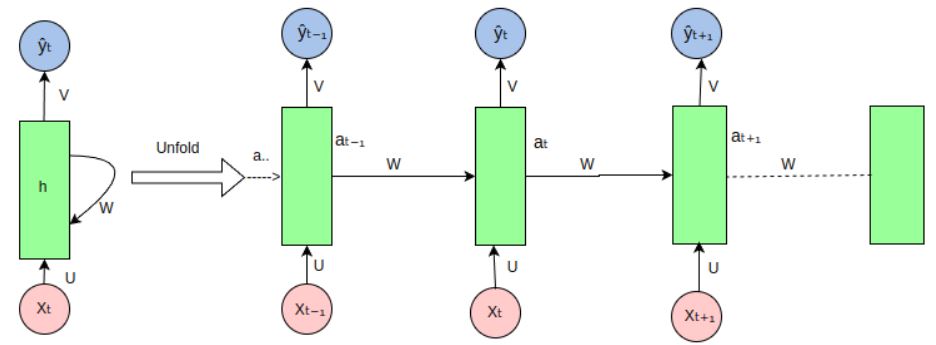

Recurrent Neural Networks (RNNs) are the good storytellers of the group. They keep track of what came before instead of living solely in the present. Imagine trying to get through a story one sentence at a time without remembering what the previous one said. That wouldn’t make much sense, would it? RNNs solve this by maintaining a “memory” of previous data, which makes them well suited to jobs like translating languages or generating text.

For instance, when you use the autocomplete feature on your phone, there may well be an RNN (or a similar sequence model) behind it. It remembers what you have typed so far and predicts what you are most likely to type next. This memory helps RNNs excel at processing sequences of data, such as making sense of a sentence or guessing the next word in song lyrics.
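The "memory" idea can be sketched with a single recurrent unit. At every step, the new memory is computed from the previous memory plus the current input. The weights below are arbitrary values I picked for illustration, and the names `rnn_step` and `run_rnn` are hypothetical. Real RNNs use whole vectors of memory and learned weights.

```python
import math

def rnn_step(hidden, x, w_hidden, w_input, bias):
    """Blend the previous memory with the current input."""
    return math.tanh(w_hidden * hidden + w_input * x + bias)

def run_rnn(sequence, w_hidden=0.5, w_input=1.0, bias=0.0):
    """Read the sequence one item at a time, carrying the memory forward."""
    hidden = 0.0  # empty memory before anything has been seen
    for x in sequence:
        hidden = rnn_step(hidden, x, w_hidden, w_input, bias)
    return hidden

forward = run_rnn([1.0, 0.0, -1.0])
backward = run_rnn([-1.0, 0.0, 1.0])   # same values, opposite order

print(forward, backward)
```

Both sequences contain the same numbers, yet the final memory is different, because each step depends on everything that came before it. That sensitivity to order is exactly what a plain feedforward network lacks.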

Other Advanced Networks

Beyond these, there are even more advanced networks, such as Generative Adversarial Networks (GANs) and Transformers. GANs are like artists. They can create new content, such as a very realistic image of a person who never existed. Imagine an art competition in which one artist tries to produce a realistic fake and the other tries to spot the fake. That’s essentially how GANs work.

Transformers are AI’s Swiss Army knives. They crunch through huge datasets and are crucial for modern applications such as language models, enabling an AI to write an essay or even chat with you. If you’ve ever marveled at some AI-written text and how realistic it sounds, you have transformers to thank.

Training Neural Networks

Training Process

Training a neural network is kind of like teaching a puppy new tricks. At the beginning, the puppy does not know what you want from it. But with practice and a few treats (or data, in our case), it begins to catch on. In much the same way, neural networks learn by adjusting their internal settings, called weights and biases, based on the examples they see.

Suppose you are training a neural network to recognize handwritten numbers. You show it thousands of examples, and slowly the network starts to figure out what makes a “2” different from a “3.” There is a lot of trial and error along the way: the network makes a prediction, checks whether it is right, and then tweaks its settings to do a little better next time.
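The predict, check, tweak cycle fits in a handful of lines. Handwritten digits would need too much data for a blog snippet, so this sketch assumes a much smaller task instead: a single neuron learning the logical OR of two inputs. The learning rate, the number of passes, and the function names are my own choices for the example.

```python
import math

def predict(weights, bias, inputs):
    """The neuron's current guess, between 0 and 1."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, epochs=1000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0          # start out knowing nothing
    for _ in range(epochs):
        for inputs, target in examples:
            guess = predict(weights, bias, inputs)   # 1. make a prediction
            error = guess - target                   # 2. check how far off it was
            # 3. nudge every setting in the direction that shrinks the error
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

# Toy dataset: the output should be 1 if either input is 1.
examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(examples)
answers = [round(predict(weights, bias, inputs)) for inputs, _ in examples]
print(answers)
```

Before training, every guess is 0.5, a coin flip. After many small nudges, the rounded answers match the targets for all four examples.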

Optimization Techniques

Optimization techniques are fancy methods that help the network learn better and faster. Think of them as the “life hacks” you might use for studying. One of these is like breaking a big task into smaller, more manageable steps so you don’t get overwhelmed: rather than trying to fix everything at once, the network improves a little with each step.
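The post does not name a specific technique here, so I am assuming the classic one, gradient descent, for this sketch. The idea is to take many small steps downhill on an error curve. The curve below, `(x - 3) ** 2`, is a made-up stand-in for a real network's loss, and the step size is an arbitrary choice.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=50):
    """Repeatedly take a small step against the slope."""
    x = start
    history = [x]
    for _ in range(steps):
        x -= learning_rate * gradient(x)
        history.append(x)
    return x, history

# Stand-in loss: (x - 3) ** 2, lowest at x = 3. Its slope is 2 * (x - 3).
best, history = gradient_descent(lambda x: 2 * (x - 3), start=0.0)

print(f"started at {history[0]}, ended near {best:.4f}")
```

No single step gets anywhere near the answer, but each one cuts the remaining distance a little, and fifty of them land very close to 3. A larger learning rate would move faster but risks overshooting, which is why the step size matters so much in practice.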

Another technique is regularization, which is a lot like making sure you don’t over-study one topic at the expense of the others. It keeps the network from getting too focused on any one detail, which helps it generalize better. This is very important, as it prevents the network from becoming very good at the training examples while failing when shown new data.
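One common form of regularization, and the one I am assuming for this sketch, is an L2 penalty. It adds a small cost for large weights, so the network is gently pulled toward simpler settings. The data, the penalty strength, and the function name `fit_weight` are all invented for illustration.

```python
def fit_weight(xs, ys, l2_strength=0.0, learning_rate=0.05, steps=500):
    """Fit y = w * x by gradient descent, optionally with an L2 penalty on w."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Slope of the average squared error with respect to w.
        data_gradient = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        # Slope of the penalty term l2_strength * w ** 2.
        penalty_gradient = 2 * l2_strength * w
        w -= learning_rate * (data_gradient + penalty_gradient)
    return w

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]   # made-up data where y is exactly 2 * x

plain = fit_weight(xs, ys)
regularized = fit_weight(xs, ys, l2_strength=1.0)

print(f"without penalty: {plain:.3f}, with penalty: {regularized:.3f}")
```

Without the penalty, the weight settles at 2, a perfect fit to the training data. With the penalty, it settles at a smaller value. On clean data like this that looks like a loss, but on noisy real-world data the same pull toward smaller weights is what keeps a network from chasing every quirk of its training examples.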

Challenges in Training

But training a neural network is not always smooth. One common challenge is overfitting, where the network tries too hard to memorize the training data and then fails to generalize to new examples. It’s like memorizing answers for a test instead of really grasping the material: you might do well on practice tests, but bomb the real one.
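A common way to catch overfitting is to hold back some data the network never trains on (a validation set) and watch its error there. The sketch below assumes that setup and uses an approach often called early stopping, which the post itself does not mention. The loss numbers are invented to show the typical pattern, and `best_epoch` is a hypothetical helper.

```python
def best_epoch(validation_losses, patience=2):
    """Return the index of the best validation loss seen so far,
    giving up once `patience` epochs pass without a new best."""
    best_index = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < validation_losses[best_index]:
            best_index = epoch
        elif epoch - best_index >= patience:
            break
    return best_index

# Made-up losses: training error keeps falling, validation error turns around.
train_losses = [0.90, 0.60, 0.40, 0.25, 0.15, 0.08, 0.04]
val_losses = [0.95, 0.70, 0.55, 0.50, 0.58, 0.66, 0.75]

stop_at = best_epoch(val_losses)
print(f"best epoch: {stop_at}, validation loss there: {val_losses[stop_at]}")
```

After epoch 3 the network keeps getting better on the practice questions while getting worse on the ones it has not seen. That widening gap is the signature of overfitting, and stopping at the best validation epoch is one simple way to deal with it.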

Another one is the vanishing gradient problem, which is a lot like being stuck in a rut when trying to learn something new. The signal that tells the earlier layers how to adjust fades as it travels back through the network, so progress slows and it becomes harder to make any improvement. To get past this, researchers have come up with tricks like using different kinds of activation functions or adding shortcuts in the network to keep things moving.
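A rough back-of-the-envelope sketch shows where the rut comes from. When the learning signal travels backward through a layer, it gets multiplied by the slope of that layer's activation function. The example below is a simplification under stated assumptions: every weight is treated as 1 and every neuron receives the same input, which real networks do not do. It compares the sigmoid activation with ReLU, one of the alternative activation functions.

```python
import math

def sigmoid_slope(z):
    """Slope of the sigmoid curve; never larger than 0.25."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def relu_slope(z):
    """Slope of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0

def gradient_through(layers, slope, z=0.5):
    """Multiply the activation slope once per layer (weights assumed to be 1)."""
    signal = 1.0
    for _ in range(layers):
        signal *= slope(z)
    return signal

sigmoid_signal = gradient_through(20, sigmoid_slope)
relu_signal = gradient_through(20, relu_slope)

print(f"after 20 sigmoid layers: {sigmoid_signal:.2e}")
print(f"after 20 ReLU layers:    {relu_signal}")
```

Multiplying a number below 0.25 by itself twenty times leaves almost nothing, so the earliest layers barely hear the feedback. With ReLU and a positive input, the slope is 1 and the signal passes through intact, which is one reason swapping the activation function helps.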

Applications of Neural Networks

Industry Applications

Neural networks are everywhere, making our lives easier in ways we might not even notice. In health care, they analyze medical images to help doctors spot diseases early. Imagine a very smart assistant looking over your shoulder as you examine an X-ray, pointing out things you might have missed. This is how neural networks help doctors reach faster and more accurate diagnoses.

In finance, such networks serve as detectives sniffing out fraud. By analyzing transaction patterns, they can tell when something looks fishy and warn the bank to take action before harm is done. And if you have ever wondered how Netflix always seems to know what you want to watch next, that’s a neural network learning your habits and suggesting shows that fit your taste.

Emerging Fields

Neural networks are also moving beyond established industries into emerging areas. Take autonomous vehicles, for example. These cars rely on neural networks to “see” the road and traffic signs and to drive with the aim of avoiding accidents. It is like giving the car its own set of eyes and a brain to process what it sees.

In art, neural networks step in as a creative partner. With tools built on GANs, it is possible to whip up new styles or mash different genres together into something altogether new. Imagine working with an AI to create a picture that is part Van Gogh and part Picasso. The sky is the limit as these tools open up new avenues for creativity.

Everyday Examples

Even in our daily activities, neural networks are in operation in the background. If you have ever used Siri or Google Assistant, you have used a neural network. These virtual assistants use neural networks to decipher your instructions and respond appropriately, whether you ask about the weather or want to set a reminder.

These are the same kinds of neural networks that social media uses to keep you hooked. They track what you like, comment on, and share, and then serve you more of it in a seemingly endless stream. This is why many people end up spending more time than they intended on Instagram or TikTok. The feed is designed to keep you occupied by giving you exactly what you want to see.

The Future of Neural Networks

Current Trends

Neural networks are evolving fast; new developments seem to be reported almost daily. Today, the greatest progress is in deep learning, which uses very large neural networks with many layers. In other words, these networks are deep thinkers in their own right. That is why artificial intelligence can now outperform world champions in games like chess and Go, or even write articles that seem surprisingly human.

Another exciting trend is the combination of neural networks with other AI techniques. By mixing and matching different approaches, researchers are creating even more powerful systems. Think of it as assembling a team of experts: each one specializes in something different, and together they can solve a much wider range of problems.

Ethical Considerations

With great power comes great responsibility, as they say. The more these neural networks become part of our lives, the more important responsible use becomes. One great concern is bias. If a neural network is trained on biased data, it might make unfair decisions that favor one group of people over another. It’s like teaching a robot by giving it only half the story; it’s never going to give you a fair outcome.

Privacy is another matter. Many of these networks deal with vast amounts of intensely personal data. The line between helpful and creepy is a thin one. Great care needs to be taken in how this data is handled, so that people’s information is protected and not exploited.

The Road Ahead

The future of neural networks looks really promising. One day, we might have networks that match human intelligence and can perform all of the tasks we do. This goal is called artificial general intelligence, or AGI for short. It is still a long way off, but it is something that keeps researchers in the field motivated.

Another area to watch is neuromorphic computing, in which computers are designed to work more like our brains do. This could make computing much faster and more energy-efficient, enabling new applications that we cannot even imagine yet. It would be like moving up from a bicycle to a rocket ship, with no idea yet where it could take us.

Final Thoughts

Neural networks are like the unseen engines powering much of the technology in use today. They have become deeply integrated into our world, from helping doctors save lives to making social media feeds addictive. But as we continue to develop and depend on these networks, it’s also important that we consider their ethical implications and make sure they are used for good.

There is a bright future for neural networks, with whole new worlds to dream of and explore. Whether we are on the brink of building machines as smart as humans or just scratching the surface of what can be done with these networks, one thing is clear: they are here to stay, and their influence will only grow. The next time you use your phone, stream a show, or talk to a virtual assistant, remember the neural networks working behind the scenes to make all that possible.