If you’re serious about turning raw data into forward‑looking decisions instead of backward‑looking reports, you need more than spreadsheets and basic BI charts. You need a predictive analytics platform: a place where your data flows in, models get built, tested, deployed, monitored—and business value flows out. Sounds neat. Reality? The market is crowded; buzzwords (AutoML, MLOps, lakehouse, generative AI) overlap; and every vendor claims “enterprise‑grade.”

In this guide I walk you through seven strong platforms you’ll actually see shortlisted inside mid‑market and large enterprises: IBM Watson Studio, SAS Viya, Microsoft Azure Machine Learning, Amazon SageMaker, Google Vertex AI, DataRobot, and RapidMiner. I’ll explain what each does well, where friction appears, and how to map them to your real needs. You’ll also get a comparison snapshot, selection criteria, scenario matching, an adoption checklist, an ROI framing, future trends, and FAQs you can pass to your leadership team.

By the end, you can confidently say: “Here’s our shortlist and here’s why,” instead of “We picked this because Gartner’s quadrant looked nice.” Let’s dive in.

What Is a Predictive Analytics Platform?

A predictive analytics platform is software (cloud, on‑prem, or hybrid) that lets you take historical and real‑time data, build statistical or machine learning models, and generate predictions—like “Which customers will churn next month?” or “How many units will we need next week?”

It is not just a dashboard tool. A solid platform covers:

- Data connectivity (databases, files, APIs, streams)

- Preparation & feature engineering (cleaning, joining, deriving variables)

- Model development (code notebooks and/or low‑code visual flows, AutoML)

- Validation & experiment tracking (metrics, version history)

- Deployment (batch scoring, real‑time endpoints, scheduled jobs)

- Monitoring (performance drift, data quality, fairness)

- Governance & security (role‑based access, lineage, audit trails)

Enterprises also care about integration with existing stacks (data lakes, warehouses), compliance, and the ability to scale across multiple teams—not just one rockstar data scientist. Predictive platforms increasingly blend with MLOps tooling so you don’t have to duct‑tape ten separate products.

Key Evaluation Criteria (How You Should Judge)

Before you look at vendor logos, align on criteria. Score each (1–5) relative to your priorities, not a generic checklist.

- Data Connectivity & Integration – Does it connect natively to your cloud storage (S3, ADLS, GCS), warehouses (Snowflake, BigQuery, Redshift, Synapse), on‑prem databases, streaming (Kafka, Kinesis)? Fewer DIY connectors = faster time to first model.

- Model Development Experience – Do your teams prefer Python/R code notebooks, or do you need visual/drag‑and‑drop for analysts? How good is AutoML when you want quick baselines?

- Scalability & Performance – Can training jobs scale horizontally? Can you leverage distributed frameworks (Spark, Ray) or managed compute clusters without manual tuning?

- MLOps & Lifecycle Management – Built‑in experiment tracking, model registry, versioning, CI/CD hooks, rollback, end‑to‑end lineage. This shrinks “model stuck in lab” risk.

- Governance, Security & Compliance – SSO, role‑based policies, encryption at rest/in transit, audit logs, data masking. Critical for regulated industries.

- Explainability & Responsible AI – Feature importance, SHAP/LIME style insights, bias detection, fairness metrics. Execs and regulators ask “Why?” not just “How accurate?”

- Collaboration & Productivity – Shared projects, reusable feature stores, commenting, templates. Less friction across data engineers, data scientists, ML engineers, analysts.

- Deployment Flexibility – Batch jobs, REST/gRPC endpoints, streaming, edge options, hybrid or multi‑cloud support. Avoid being boxed into one runtime model.

- Pricing & Total Cost of Ownership (TCO) – Transparent or “call us”? Consumption vs. seat licenses vs. bundles. Consider hidden infra spend (compute, storage, network egress) plus people costs.

- Support & Ecosystem – Official SDKs, marketplace of pre‑built models, partner network, community Q&A, training programs. You’ll need help eventually.

Tip: Weight each criterion (e.g., Security 20%, AutoML 10%, Deployment 15%, etc.) and produce a weighted score. That simple exercise prevents politics from driving the decision.
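The weighted scoring in the tip above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the criterion names, the weights, the 1–5 scores, and the "Platform A/B" labels are all hypothetical placeholders to swap for your own.

```python
# Hypothetical weights (must sum to 1.0) and hypothetical 1-5 scores.
WEIGHTS = {
    "security": 0.20,
    "automl": 0.10,
    "deployment": 0.15,
    "connectivity": 0.20,
    "mlops": 0.20,
    "tco": 0.15,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Return the weighted average of per-criterion scores."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


# Placeholder candidates; the numbers are made up for illustration.
candidates = {
    "Platform A": {"security": 5, "automl": 3, "deployment": 4,
                   "connectivity": 4, "mlops": 4, "tco": 2},
    "Platform B": {"security": 3, "automl": 5, "deployment": 4,
                   "connectivity": 3, "mlops": 3, "tco": 4},
}

# Rank candidates from highest to lowest weighted score.
ranking = sorted(
    ((name, round(weighted_score(s, WEIGHTS), 2)) for name, s in candidates.items()),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, score in ranking:
    print(f"{name}: {score}")
```

Agreeing on the weights before anyone scores a vendor is what keeps the exercise honest; the arithmetic itself is trivial.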

Quick Comparison Snapshot

| Platform | Ideal For | Deployment Models | Strength Highlights | Typical Limitation | Pricing Style |
|---|---|---|---|---|---|
| IBM Watson Studio | Regulated, hybrid enterprises | Cloud + on‑prem via Cloud Pak | Governance, SPSS flows + notebooks, AutoAI | Can feel heavy/complex | Subscription / enterprise agreements |
| SAS Viya | Mature analytics shops needing statistical depth | Cloud & containerized | Rich analytics legacy + modern cloud stack | Licensing perception & cost | Enterprise licensing |
| Azure Machine Learning | Microsoft‑centric orgs | Azure cloud | Integrated with Azure data & DevOps ecosystem | Best inside Azure; multi‑cloud less smooth | Pay‑as‑you‑go + reserved |
| Amazon SageMaker | AWS‑native scalable workloads | AWS cloud | Breadth of ML services & deployment options | Many components = learning curve | Consumption (instances/features) |
| Google Vertex AI | Data/AI convergence, BigQuery users | GCP cloud | Unified tooling, strong AutoML + pipelines | GCP‑centric; migration needed | Consumption |
| DataRobot | Fast value & governed AutoML | Cloud / hybrid | Speed to model + explainability | Deep custom code less flexible | Subscription tiers |
| RapidMiner | Citizen data scientists + mixed skill teams | Cloud / on‑prem | Visual workflows + extensions + governance | Very advanced custom ML may outgrow | Subscription / tiered |

Use this as orientation—not a final verdict.

The 7 Predictive Analytics Platforms (Deep Dive)

1. IBM Watson Studio

Snapshot: IBM Watson Studio (often bundled in IBM’s broader data & AI suite) blends code notebooks, SPSS Modeler drag‑and‑drop flows, AutoAI, and governance features aimed at enterprises with hybrid or regulated environments.

Standout Features: AutoAI (automatic feature engineering and model selection), SPSS Modeler for non‑coders, integrated data catalogs, model risk management hooks, strong lineage/audit, deployment through Watson Machine Learning.

Enterprise Strengths: Mature governance and policy controls suit financial services, healthcare, government. SPSS flows help legacy analytics teams transition toward modern ML without alienating business analysts. Integration with IBM Cloud Pak for Data enables containerized on‑prem or OpenShift deployments.

Potential Drawbacks: Interface breadth can feel overwhelming. Pricing/licensing can be complex, especially if you only need a subset. Pure‑cloud‑native startups may find it heavier than needed.

Best For: You, if compliance, auditability, and hybrid flexibility top your list.

Common Use Cases: Risk scoring, fraud detection, churn prediction, supply chain planning.

Pricing Angle: Package/subscription; cost clarity improves after scoping user roles + compute.

2. SAS Viya

Snapshot: SAS modernized its long‑standing analytics powerhouse into Viya—a cloud‑native, containerized platform offering advanced statistics, ML, decisioning, and data management with a browser interface and open language support (Python integration).

Standout Features: Deep statistical and forecasting procedures, optimization, integrated governance, model management, visual pipelines, decision flows, and support for open source (Jupyter integration, PROC PYTHON).

Enterprise Strengths: Trusted accuracy and validation in regulated settings; leadership comfortable with SAS output. Smooth path for teams with existing SAS codebase to containerized cloud operations. Strong support & training assets.

Potential Drawbacks: Perception of higher licensing cost vs. open source stacks. Some newer data science hires may prefer pure open source toolchains.

Best For: Organizations already invested in SAS or requiring robust validated statistical methods (insurance reserving, clinical, credit risk) plus modernization.

Common Use Cases: Demand forecasting, actuarial models, credit scoring, optimization scenarios.

Pricing Angle: Enterprise licensing; ROI hinges on consolidating multiple legacy tools into Viya.

3. Microsoft Azure Machine Learning (Azure ML)

Snapshot: Azure ML integrates with the broader Azure data estate—Synapse, Data Factory, Databricks (if chosen), and Azure DevOps—to offer a flexible environment for code‑first data scientists and AutoML users.

Standout Features: Managed compute clusters, ML pipelines, AutoML, responsible AI dashboards (explainability, fairness, error analysis), model registry, integration with GitHub and Azure DevOps, and prompt flow for building generative AI applications.

Enterprise Strengths: Seamless identity (Microsoft Entra ID, formerly Azure AD), networking (VNets, private endpoints), security, and cost management if you already run on Azure. Good path from experimentation to production with batch endpoints and managed online endpoints.

Potential Drawbacks: If you are multi‑cloud, deep tie‑ins may reduce portability. The breadth of Azure services means governance can sprawl without clear architecture guidelines.

Best For: Microsoft‑centric enterprises wanting end‑to‑end integration and IT alignment.

Common Use Cases: Predictive maintenance (IoT + Time Series), customer lifetime value, anomaly detection, demand planning.

Pricing Angle: Pay‑as‑you‑go for compute, storage, managed endpoints + some advanced features; control costs with autoscaling and low‑priority (spot) VMs.

4. Amazon SageMaker

Snapshot: SageMaker offers a modular “toolbox” approach: notebooks, processing jobs, training jobs, hyperparameter tuning, model registry, real‑time & batch inference, feature store, pipelines, model monitor, plus industry‑specific add‑ons.

Standout Features: Broad integrated services (Feature Store, Clarify for bias/explainability, Data Wrangler for prep, Autopilot for AutoML, JumpStart model zoo), flexible deployment (real‑time, serverless, edge with Neo).

Enterprise Strengths: Elastic scaling, fine‑grained IAM control, huge ecosystem of AWS data services (Glue, Redshift, EMR, Kinesis). If you’re already “all‑in” on AWS, security/network patterns are consistent.

Potential Drawbacks: Menu complexity can slow newcomers. Costs can creep if idle instances stay running or experiments are not tagged and cleaned. Opinionated toward AWS—multi‑cloud portability requires containerization discipline.

Best For: Cloud‑savvy teams building many models at scale, needing performance tuning and fine cost control.

Common Use Cases: Personalization, fraud detection, forecasting (with DeepAR), computer vision, large‑scale batch propensity scoring.

Pricing Angle: Granular consumption—great if you enforce lifecycle policies; risky if governance is loose.

5. Google Vertex AI

Snapshot: Vertex AI unifies data prep, notebooks, AutoML, custom training, pipelines (Vertex Pipelines), feature store, model registry, and monitoring on top of Google Cloud infrastructure. It stresses integration with BigQuery and a simplified end‑to‑end ML experience.

Standout Features: Strong AutoML (tabular, vision, NLP, forecasting), integration with BigQuery ML, embedded explainability, pipelines built on Kubeflow under the hood, unified APIs, and growing generative AI toolset.

Enterprise Strengths: If your analytics gravity already lives in BigQuery, friction drops dramatically—train directly on warehouse data without large exports. Scales smoothly for large datasets. Simple pricing on many managed pieces vs. DIY cluster wrangling.

Potential Drawbacks: Primarily attractive if you embrace GCP; migrating large data estates from other clouds can be non‑trivial. Some advanced customization still pushes you to underlying infrastructure (GKE).

Best For: Data teams consolidating analytics + ML in BigQuery, or those wanting fast AutoML prototypes that can graduate to custom training.

Common Use Cases: Marketing mix modeling, demand forecasting, call center analytics (NLP), computer vision, recommendation engines.

Pricing Angle: Consumption (training hours, prediction, storage) + BigQuery costs; watch cross‑region egress.

6. DataRobot

Snapshot: DataRobot positions itself as an “AI lifecycle platform” with a strong heritage in AutoML plus MLOps, governance, and explainability, designed to accelerate delivery of predictive models for both data scientists and business analysts.

Standout Features: Automated feature engineering, model ranking/leaderboards, built‑in explainability (SHAP‑style insights), compliance documentation generation, champion/challenger management, monitoring dashboards, guardrails for bias and drift, time series automation.

Enterprise Strengths: Speed to first ROI; helpful when you have limited senior ML engineering bandwidth. Governance artifacts (model factsheets) help with internal risk committees. Consistent interface reduces context switching.

Potential Drawbacks: Power users wanting deep custom algorithm tweaks may feel constrained vs. fully code‑centric stacks. Cost justification must show value vs. assembling open source + cloud primitives yourself.

Best For: Enterprises wanting to empower a mixed skill team (analysts + DS) and produce auditable models quickly.

Common Use Cases: Churn, credit risk, marketing propensity, forecasting, claims triage.

Pricing Angle: Tiered subscription (seats + usage). ROI often demonstrated via rapid pilot wins.

7. RapidMiner

Snapshot: RapidMiner (now part of Altair) emphasizes a visual workflow designer plus extensions, with options for AutoML, code scripting, and governance controls. It appeals to organizations nurturing “citizen data scientists” who still need oversight.

Standout Features: Drag‑and‑drop operators, integrated data prep, Auto Model, explainability panels, extension marketplace, and on‑prem/hybrid flexibility.

Enterprise Strengths: Low barrier for analysts to build prototypes; consistent interface speeds learning. Governance features (project controls, versioning) reduce the “wild west” chaos of ungoverned visual workflows.

Potential Drawbacks: Extremely advanced custom deep learning or large‑scale distributed training may push teams to supplement with native cloud ML services. Ensuring performance on huge datasets can require architectural planning.

Best For: Mixed teams where you want to elevate power Excel/BI users into predictive modeling safely while still giving data scientists space.

Common Use Cases: Customer segmentation, churn, upsell, quality inspection, basic forecasting.

Pricing Angle: Tiered subscription; attractive if you derive value from broad adoption beyond a small DS team.

Matching Platforms to Enterprise Scenarios

| Scenario | Top Priorities | Recommended Platform(s) | Why |
|---|---|---|---|
| Highly Regulated (Banking/Healthcare) | Governance, audit, explainability | IBM Watson Studio, SAS Viya, DataRobot | Strong lineage, documentation, risk controls |
| Cloud‑Native DevOps Mature | CI/CD integration, infra automation | SageMaker, Azure ML, Vertex AI | Deep API + pipeline tooling |
| Hybrid / Multi‑Cloud | Portability, containerization | IBM (Cloud Pak), SAS Viya, DataRobot (hybrid), RapidMiner (on‑prem option) | Flex deployment patterns |
| Citizen Data Scientist Enablement | Low‑code + guardrails | RapidMiner, DataRobot, Watson Studio (SPSS) | Visual flows + governance |
| High‑Volume Real‑Time Predictions | Elastic endpoints, monitoring | SageMaker, Vertex AI, Azure ML | Scalable managed inference |
| Fast Time‑to‑Value Pilot | AutoML strength, templates | DataRobot, Vertex AI, RapidMiner | Quick baseline models |
| Deep Statistical / Advanced Forecasting | Rich analytics procedures | SAS Viya, IBM, DataRobot (time series) | Mature forecasting toolkits |

How to Shortlist: Take your top three scenarios, map them to 2–3 platforms each, gather weighted scores, then run a single pilot per finalist—not seven parallel experiments that burn time.
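One rough way to turn the scenario table into a starting shortlist is to count how often each platform is recommended across your top three scenarios. The sketch below does that in Python. The counting heuristic is my assumption rather than a formal method, the platform names are normalized from the table (for example "IBM (Cloud Pak)" becomes "IBM Watson Studio"), and the three scenarios in the example call are hypothetical picks. Weighted scores should still decide the finalists.

```python
from collections import Counter

# Recommendations taken from the scenario table above, with names normalized.
SCENARIO_PLATFORMS = {
    "Highly Regulated": ["IBM Watson Studio", "SAS Viya", "DataRobot"],
    "Cloud-Native DevOps Mature": ["SageMaker", "Azure ML", "Vertex AI"],
    "Hybrid / Multi-Cloud": ["IBM Watson Studio", "SAS Viya", "DataRobot", "RapidMiner"],
    "Citizen Data Scientist Enablement": ["RapidMiner", "DataRobot", "IBM Watson Studio"],
    "High-Volume Real-Time Predictions": ["SageMaker", "Vertex AI", "Azure ML"],
    "Fast Time-to-Value Pilot": ["DataRobot", "Vertex AI", "RapidMiner"],
    "Deep Statistical / Advanced Forecasting": ["SAS Viya", "IBM Watson Studio", "DataRobot"],
}


def shortlist(top_scenarios: list[str], max_finalists: int = 3) -> list[str]:
    """Return the platforms recommended most often across the given scenarios."""
    counts = Counter()
    for scenario in top_scenarios:
        counts.update(SCENARIO_PLATFORMS[scenario])
    # most_common orders by count, highest first.
    return [name for name, _ in counts.most_common(max_finalists)]


# Hypothetical example: a regulated firm with hybrid infrastructure that wants a quick pilot.
finalists = shortlist(["Highly Regulated", "Hybrid / Multi-Cloud", "Fast Time-to-Value Pilot"])
print(finalists)
```

Ties are common with only three scenarios, so treat the output as a conversation starter and break ties with the weighted criteria.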

Implementation & Adoption Checklist

- Clarify Value Use Cases: Start with a business question (“Reduce monthly churn by 3%,” “Cut inventory days by 5”). Tie a KPI to each.

- Data Readiness Audit: List required data sources, assess quality (completeness, latency), and define minimal viable dataset. Bad data kills predictive ROI.

- Skill Inventory: Who will own data engineering, modeling, MLOps, and domain interpretation? Identify gaps and plan targeted training (not just generic ML courses).

- Select Pilot Use Case: Pick something with high business impact that is feasible within 6–8 weeks (not your messiest data swamp).

- Establish MLOps Baseline: Version control (Git), environment reproducibility, experiment tracking, model registry. Even simple conventions early save rework.

- Governance & Responsible AI: Define approval workflow, drift thresholds, retraining triggers, bias checks, and documentation templates before deployment frenzy.

- Deploy & Monitor: Push a model to production in limited scope (one segment or region) to gather performance + adoption feedback.

- Feedback Loop: Collect end‑user (analyst, sales rep, planner) feedback on prediction usefulness. Iterate features, thresholds.

- Scale Portfolio: As wins accrue, build a central model inventory dashboard: owner, business KPI, last retrain date, current performance.

- Change Management: Communicate how predictions support—not replace—human decisions. Provide quick start guides and mini training sessions.

Common Pitfalls: Tool sprawl (“every team picks their own toy”), ignoring data quality, skipping monitoring (silent model decay), underestimating cultural shift, no clear success metric.

Calculating ROI of Predictive Analytics

You justify platform spend by incremental impact, not model counts.

Value Drivers:

- Revenue Lift: Personalized offers, better cross‑sell, higher retention.

- Cost Reduction: Optimized inventory, reduced waste, automated decisions.

- Risk Mitigation: Fraud avoidance, credit risk accuracy, compliance alerts.

- Productivity: Faster analyst cycle times, fewer manual estimates, engineer efficiency.

Cost Components:

- Licensing/subscription fees (platform + add‑ons)

- Cloud/infra (compute hours, storage, network)

- Talent (data engineers, data scientists, MLOps engineers, training)

- Integration & change management (process redesign)

- Ongoing monitoring & retraining (operational overhead)

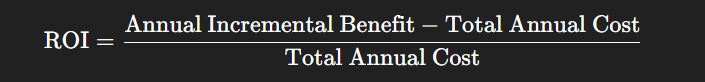

Simple ROI Formula: ROI = (incremental benefit – total cost) / total cost, expressed as a percentage.

Example Frame: If churn reduction adds $2M retained margin and total annual platform + team incremental cost is $800K, ROI = (2,000,000 – 800,000) / 800,000 = 150%.
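The arithmetic in the example above is easy to wrap in a small helper so finance and data teams compute it the same way. This is a minimal sketch: the function name `roi` and its parameter names are my own, and the inputs are the figures from the example.

```python
def roi(incremental_benefit: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (incremental_benefit - total_cost) / total_cost


# Figures from the churn example: $2M retained margin, $800K incremental cost.
example = roi(2_000_000, 800_000)
print(f"ROI: {example:.0%}")  # ROI: 150%
```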

Time to Value Benchmarks (Guideline):

- Pilot measurable impact: 6–10 weeks

- Portfolio of 5–10 live models: 6–12 months

- Organizational scaling (governed model factory): 12–24 months

Tip: Track Model Impact per Dollar—aggregate incremental profit or cost savings divided by combined ML spend. This gives leadership a simple trend metric.
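A portfolio‑level version of that metric might look like the sketch below. The `ModelLedgerEntry` structure and its field names are hypothetical. The first entry reuses the churn figures from the ROI example, and the second entry is invented purely to show aggregation.

```python
from dataclasses import dataclass


@dataclass
class ModelLedgerEntry:
    name: str
    incremental_profit: float  # annual profit or savings attributed to the model
    ml_spend: float            # annual platform, infra, and people cost allocated to it


def impact_per_dollar(entries: list[ModelLedgerEntry]) -> float:
    """Aggregate incremental profit divided by combined ML spend."""
    total_spend = sum(e.ml_spend for e in entries)
    if total_spend <= 0:
        raise ValueError("combined ML spend must be positive")
    return sum(e.incremental_profit for e in entries) / total_spend


portfolio = [
    ModelLedgerEntry("churn", 2_000_000, 800_000),            # from the ROI example
    ModelLedgerEntry("demand-forecast", 500_000, 450_000),    # hypothetical
]
ratio = impact_per_dollar(portfolio)
print(f"Model impact per dollar: {ratio:.2f}")
```

Recomputing this each quarter gives the trend line; a single snapshot says little on its own.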

Future Trends to Watch

- Unified Data + AI Platforms: Warehouse + lake + ML in one fabric reduces data movement friction (lakehouse architectures).

- Generative Assistants for Data Scientists: AI co‑pilots suggesting feature transformations, writing baseline code, generating documentation.

- Automated Governance: Continuous lineage capture and policy enforcement “baked in,” not bolted on.

- Edge & Real‑Time Inference: Demand for low‑latency predictions at the edge (IoT devices, retail POS).

- Responsible AI as Default: Bias checks, explainability visualizations, and model cards auto‑generated for every deployment.

- Business Application Embedding: Predictions surfaced directly inside CRM/ERP/UIs rather than separate dashboards—driving adoption.

Stay flexible so you can absorb these shifts without ripping out your foundation.

FAQs

1. How is a predictive analytics platform different from a BI tool?

BI tools mostly summarize what happened (descriptive). Predictive platforms estimate what will happen and enable interventions (next best offer, reorder timing). You can still visualize results inside BI later.

2. Do small or mid‑size enterprises need an “enterprise” platform?

If you only have 1–2 focused models, a lean open source stack may suffice. Once you need governance, multiple teams, and regulated oversight, platform value outweighs licensing cost.

3. Is AutoML enough—I can just click “run,” right?

AutoML gives strong baselines fast, but you still need data understanding, feature rigor, validation, fairness checks, and proper deployment monitoring. Think of it as a productivity booster, not a strategy.

4. How do I ensure explainability for audits?

Pick a platform with built‑in feature importance, SHAP values, and model documentation exports. Standardize a “model factsheet” template (purpose, data sources, metrics, bias tests) and store it with each version.
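If your platform does not export documentation in the shape you need, a factsheet can start as a small structured record stored next to each model version. The sketch below is one possible layout, not a standard: the class name, the field names beyond the four listed above, and every example value are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelFactsheet:
    model_name: str
    version: str
    purpose: str
    data_sources: list[str]
    metrics: dict[str, float]
    bias_tests: dict[str, str] = field(default_factory=dict)

    def to_json(self) -> str:
        """Serialize with sorted keys so diffs between versions stay readable."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Hypothetical example values.
sheet = ModelFactsheet(
    model_name="churn-classifier",
    version="1.3.0",
    purpose="Rank customers by 30-day churn risk for retention outreach",
    data_sources=["crm.accounts", "billing.invoices"],
    metrics={"auc": 0.87, "recall_at_top_decile": 0.42},
    bias_tests={"age_band_parity": "passed"},
)
print(sheet.to_json())
```

Committing the JSON alongside the model artifact in Git gives auditors a versioned trail without extra tooling.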

5. What skills does my team need before adopting a platform?

Data engineering (pipelines, quality), data science (statistics, ML algorithms), domain expertise, and MLOps (deployment, monitoring). You don’t need ten seniors on day one—start with a balanced core plus training.

6. How often should models be retrained?

It depends on data drift and business volatility. Some demand forecasts retrain weekly; stable credit risk models might refresh quarterly. Set monitoring thresholds (e.g., performance drops 5–10% vs. baseline) to trigger retraining.
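A threshold check like the one described can be as simple as the sketch below. It assumes a higher‑is‑better metric such as AUC and uses a relative drop against the baseline. The function name, the 5% default, and the example metric values are illustrative choices, not recommendations.

```python
def should_retrain(baseline: float, current: float, max_relative_drop: float = 0.05) -> bool:
    """Return True when the metric has fallen more than max_relative_drop below baseline.

    Assumes a higher-is-better metric (e.g., AUC or accuracy).
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    relative_drop = (baseline - current) / baseline
    return relative_drop > max_relative_drop


# Hypothetical monitoring readings against a baseline AUC of 0.80.
small_dip = should_retrain(0.80, 0.78)   # roughly a 2.5% drop
large_dip = should_retrain(0.80, 0.74)   # roughly a 7.5% drop
print(small_dip, large_dip)
```

In practice you would pair a performance check like this with data drift and data quality checks, since labels often arrive too late to catch decay on their own.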

7. Can I use multiple platforms together?

Yes, but manage complexity: centralize governance and catalogs to avoid duplicated features and inconsistent metrics. Often one “primary” platform plus a specialized tool (e.g., for deep learning) is plenty.

8. How do I avoid vendor lock‑in?

Use portable artifacts: containerized inference images, exportable model formats (ONNX, PMML where relevant), store code and feature definitions in Git, and abstract data access layers. Multi‑cloud may still carry cost overhead—justify it.

Conclusion

Choosing a predictive analytics platform isn’t about picking the flashiest feature list. It’s aligning your business goals, data maturity, and governance needs with the strengths of each option. Start with clear value cases, audit your data, run a disciplined pilot on 1–2 shortlisted platforms, and build repeatable MLOps practices early. When you focus on measurable outcomes (churn down, forecast accuracy up, fraud loss reduced), the platform conversation shifts from “cost center” to “strategic multiplier.”

So pick your top use case, select a balanced candidate, spin up that pilot, and let results drive your path.