Docker is a game-changing platform that helps developers build, ship, and run applications in a new way: inside containers. Simply stated, a container packages everything an application needs to run, including libraries, dependencies, and system tools. This lets the application run the same way across different environments, which addresses the age-old “works on my machine” problem.

Before Docker, inconsistencies between development, test, and production environments were a persistent problem for developers. Small differences in operating systems, configurations, or dependencies caused unexpected behavior. Docker largely eliminates these issues by providing a standard environment, which lets developers work faster and with less effort during development and testing and reduces the effort needed to deploy applications.

Docker is a cornerstone of modern development practices such as DevOps and microservices. It speeds up software delivery pipelines, fosters collaboration across teams, and supports cloud-native applications. Whether for a small personal project or a huge enterprise application, Docker makes things easier and more efficient.

Understanding the Basics of Docker

What’s Containerization?

Containerization means bundling an application together with all of its required dependencies in a container. Containers are lightweight, portable, environment-independent units that produce consistent results everywhere: on a developer’s laptop, on a test server, and in a production cloud. The concept is not new, but Docker popularized it and made it accessible to a much wider group of developers by simplifying it.

Containers are sometimes compared to virtual machines, but the two technologies work quite differently. A virtual machine virtualizes an entire operating system, whereas containers share the host system’s kernel. This makes containers much lighter and faster to start, stop, and manage than virtual machines, which demand more resources and take longer to boot.

By using containers, developers can encapsulate an application together with the environment it runs in, so that the application behaves the same way wherever it is deployed. The result is fewer compatibility issues, faster development cycles, and greater deployment flexibility.

Traditional Virtual Machines vs. Docker

At first sight, Docker containers and virtual machines look alike: each provides an isolated environment for an application. Their architectures, however, are very different. Virtual machines run on a hypervisor, and each one is allocated its own resources (CPU, memory, disk, etc.) and runs its own full OS instance, which adds substantial overhead to the system.

Docker containers, in contrast, share the host OS kernel. Because they do not need a full OS instance of their own, they start faster and use resources far more efficiently. A single host can often run hundreds of containers with little overhead, whereas running the same number of VMs would require much more computing power.

How Docker Works: A Technical Overview

Docker Architecture

Docker has a simple architecture consisting of three core components, namely, the Docker client, Docker daemon, and Docker registry.

- Docker Client: This is the interface with which users interact. The client accepts commands such as docker run or docker build and communicates them to the Docker daemon.

- Docker Daemon: The daemon (dockerd) is the workhorse. It listens for requests from the client and manages containers, images, networks, and volumes on the host.

- Docker Registry: Registries store Docker images, which are like blueprints for containers. Docker Hub is Docker’s official public registry, but you can also set up private ones. The daemon pulls images from a registry to create containers and can push locally built images back to it.

These pieces fit together well to create, deploy, and run applications inside containers. Docker architecture makes it easy to manage containers on a single host or across a cluster of machines with orchestration tools like Docker Swarm or Kubernetes.

Docker Key Terminology

To get started, learn Docker’s key terms:

- Docker Image: It is a read-only template used for creating containers. It contains everything needed to run an application, including the code, environment, libraries, and dependencies.

- Docker Container: A container is a running instance of an image: the isolated environment in which an application runs. Containers are lightweight and fast, and they can easily be stopped, restarted, or destroyed.

- Dockerfile: A Dockerfile is a text file containing a series of instructions for building a Docker image. Developers use Dockerfiles to state explicitly what an image includes: the base operating system, libraries, and application code.

- Volumes: Docker uses volumes to persist data. Since containers are transitory, any data not persisted in a volume is lost when that container is removed. Volumes allow data persistence and sharing between multiple containers.

Docker Networking

Docker provides networking so that containers can communicate with each other and with external systems. It offers several network drivers:

- Bridge: The default network mode. Containers attached to the same bridge network can communicate with each other; on a user-defined bridge network, they can also reach each other by container name.

- Host: The container uses the host machine’s network stack directly, with no network isolation from the host, which gives it direct access to the external network.

- Overlay: Connects containers running on different Docker hosts, which lets services in a Docker Swarm cluster communicate with each other.

Docker networking makes it simple to isolate the parts of an application securely, and it is easy to configure.

Building Your First Docker Application

Now that you understand the basics, let’s get hands-on by building a simple Docker application. In this example, we’ll containerize a basic Python web application.

Step 1: Writing a Dockerfile

Create a file named “Dockerfile” in the project directory and add the following content:

# Use an official Python runtime as a parent image
FROM python:3.12-slim

# Set the working directory
WORKDIR /app

# Copy the current directory contents into the container
COPY . /app

# Install the dependencies (without keeping pip's download cache in the image)
RUN pip install --no-cache-dir -r requirements.txt

# Define the command to run the application
CMD ["python", "app.py"]

This Dockerfile does several things:

- Pulls a lightweight Python image from Docker Hub.

- Sets the working directory to “/app”.

- Copies the contents of the project directory to the container.

- Installs the necessary dependencies.

- Defines the command to start the Python application.

Step 2: Building the Docker Image

To build the image, open a terminal and navigate to the project directory. Run the following command:

docker build -t my-python-app .

Docker will execute the instructions in the Dockerfile to create an image called my-python-app.

Step 3: Running the Container

Once the image is built, you can run the container using the following command:

docker run -p 5000:5000 my-python-app

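This starts the container and publishes it on port 5000 of your machine: the -p flag maps a host port (first) to a container port (second). As long as the application inside the container listens on 0.0.0.0 rather than only on localhost, you can open a browser and visit http://localhost:5000 to see your Python application running inside a Docker container.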
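Because the order of the -p value is easy to get backwards, here is a small, hypothetical Python helper that splits a publish spec of the form [ip:]hostPort:containerPort[/protocol] into its parts. It is a simplified sketch for illustration (it ignores port ranges, IPv6 addresses, and forms that omit the host port), not Docker’s own parser.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]  # None means Docker binds on all host interfaces
    host_port: int
    container_port: int
    protocol: str


def parse_publish(spec: str) -> PortMapping:
    """Split a -p value such as "5000:5000" or "127.0.0.1:8080:80/udp" into its parts.

    Simplified sketch: only [ip:]hostPort:containerPort[/protocol] is handled,
    not port ranges, IPv6 addresses, or forms that omit the host port.
    """
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 2:
        host_ip = None
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported publish spec: {spec!r}")
    # The host side comes first, the container side second; TCP is the default
    return PortMapping(host_ip, int(host_port), int(container_port), protocol or "tcp")


print(parse_publish("5000:5000"))
print(parse_publish("127.0.0.1:8080:80/udp"))
```

For the command in Step 3, the host port and container port happen to be the same, which hides the ordering; with a value like 8080:5000, the browser would use http://localhost:8080 while the app still listens on 5000 inside the container.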

This is just a simple example, but the same principles apply to more complex applications. With Docker, you can easily package and run any application in a portable, isolated environment.

Dockerfile Best Practices

While a Dockerfile looks straightforward, there are a few best practices you should follow to keep your images efficient, secure, and easy to maintain.

1. Use Small Base Images

Where possible, use a minimal base image such as alpine or the slim variants of the official images. Smaller base images produce a smaller final image, which builds, pulls, and pushes faster across your environments.

2. Optimize Layering

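Each RUN, COPY, and ADD instruction in a Dockerfile creates a new layer in the image. Where it makes sense, combine related commands into a single RUN instruction to reduce the number of layers. This matters most for cleanup steps: files deleted in a later layer still take up space in the earlier layer that created them, so the cleanup must happen in the same RUN instruction. For example: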

RUN apt-get update && apt-get install -y \
    python3 \
    python3-pip \
    && rm -rf /var/lib/apt/lists/*

3. Use Caching

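Docker caches the result of each build step. If an instruction, and any files it copies, are unchanged since the last build, Docker reuses the cached layer, which greatly speeds up later builds. Once one step changes, however, every step after it must be rebuilt. To make the most of the cache, place the parts of your Dockerfile that change least often near the top; for example, copy requirements.txt and install dependencies before copying the rest of your source code.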
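To see why ordering matters, here is a deliberately simplified Python model of layer caching. It is an assumption made for illustration, not Docker’s actual cache algorithm: each step’s cache key hashes its parent’s key, the instruction text, and the contents of any files the step copies, so one changed key invalidates every later step.

```python
import hashlib
from typing import Dict, List, Sequence, Tuple

# A build step: (instruction text, paths of files the step copies into the image)
Step = Tuple[str, Sequence[str]]


def cache_keys(steps: Sequence[Step], files: Dict[str, str]) -> List[str]:
    """Chain a hash through the steps, so a changed layer changes every key after it."""
    keys: List[str] = []
    parent = ""
    for instruction, paths in steps:
        digest = hashlib.sha256()
        digest.update(parent.encode())
        digest.update(instruction.encode())
        for path in sorted(paths):
            digest.update(path.encode())
            digest.update(files[path].encode())
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(steps: Sequence[Step], before: Dict[str, str], after: Dict[str, str]) -> List[str]:
    """List the instructions whose cached layers a file change would invalidate."""
    old, new = cache_keys(steps, before), cache_keys(steps, after)
    return [instr for (instr, _), a, b in zip(steps, old, new) if a != b]


v1 = {"requirements.txt": "flask\n", "app.py": "print('v1')\n"}
v2 = {"requirements.txt": "flask\n", "app.py": "print('v2')\n"}  # only app code changed

copy_all_first: List[Step] = [
    ("FROM python:slim", []),
    ("COPY . /app", ["app.py", "requirements.txt"]),
    ("RUN pip install -r requirements.txt", []),
]
deps_first: List[Step] = [
    ("FROM python:slim", []),
    ("COPY requirements.txt /app/", ["requirements.txt"]),
    ("RUN pip install -r requirements.txt", []),
    ("COPY . /app", ["app.py", "requirements.txt"]),
]

print(rebuilt_steps(copy_all_first, v1, v2))  # the pip install step is invalidated
print(rebuilt_steps(deps_first, v1, v2))      # the pip install step stays cached
```

With the dependencies copied and installed first, editing app.py only invalidates the final COPY, so the slow dependency installation is served from the cache.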

4. Avoid Hardcoding Secrets

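Never put sensitive credentials such as passwords or API keys directly in your Dockerfile, where they become part of the image. Instead, supply them at runtime through environment variables or Docker secrets, and use BuildKit’s build secrets (RUN --mount=type=secret) for credentials that are needed during the build itself.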
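On the application side, Docker secrets (in Swarm services and in Compose) are mounted as files under /run/secrets/<secret_name>. The hypothetical read_secret helper below sketches one common pattern: prefer the mounted file, and fall back to an environment variable passed at runtime. The secret name db_password and the variable DB_PASSWORD are illustrative assumptions.

```python
import os
from pathlib import Path
from typing import Optional


def read_secret(name: str, env_var: Optional[str] = None,
                secrets_dir: str = "/run/secrets") -> Optional[str]:
    """Return a secret's value, or None if it is not provided.

    Looks for a file mounted by Docker secrets first, then for an
    environment variable passed at runtime (e.g. with docker run -e).
    """
    secret_file = Path(secrets_dir) / name
    if secret_file.is_file():
        # Drop the trailing newline that secret files often end with
        return secret_file.read_text(encoding="utf-8").rstrip("\n")
    if env_var is not None:
        return os.environ.get(env_var)
    return None


# Hypothetical usage inside the application container
db_password = read_secret("db_password", env_var="DB_PASSWORD")
```

Either way, the value never appears in the Dockerfile or in the image layers.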

Following these guidelines keeps your Dockerfiles secure and efficient and makes long-term container maintenance simpler.

Managing Containers: Essential Docker Commands

To work effectively with Docker, you need to know a handful of fundamental commands for operating on containers. Here are some of the most important ones and how to use them:

docker run

The “docker run” command is the primary method for launching a container from an image. For example:

docker run -d -p 8080:80 --name webserver nginx

This command runs an NGINX container in detached mode (“-d”), maps port 8080 on the host to port 80 in the container, and gives the container the name “webserver.”

docker ps

This command lists all the running containers. By default, it shows the container ID, image name, command, and uptime, among other details:

docker ps

To list all containers, including those that are stopped, you can use the “-a” flag:

docker ps -a

docker stop and docker rm

To stop a running container, use the “docker stop” command, followed by the container name or ID:

docker stop webserver

To remove a stopped container, use “docker rm”:

docker rm webserver

You can also forcefully remove a running container by using the “-f” flag:

docker rm -f webserver

docker logs

The “docker logs” command allows you to view the logs from a running container. This is useful for debugging and monitoring applications:

docker logs webserver

You can also tail logs using the -f flag to see real-time log output:

docker logs -f webserver

docker exec

To run a command inside a running container, use “docker exec”. For example, to start a shell session inside a container:

docker exec -it webserver /bin/bash

The -i and -t flags (combined here as -it) keep standard input open and allocate a terminal, which makes the session interactive, so you can run commands within the container as if you were logged in to a separate machine.

Mastering these commands will make managing Docker containers more efficient and flexible, whether you are developing locally or managing production environments.

Docker Compose: Simplifying Multi-Container Applications

While it is easy to run a single application in Docker, real-world applications usually consist of several services that need to work together. This is where Docker Compose comes in. Docker Compose is a tool for defining and running multi-container Docker applications: with a single YAML file, you can configure all of your application’s services and start them with a single command.

What is Docker Compose?

Docker Compose allows you to manage multi-container applications using a single configuration file (docker-compose.yml). It eliminates the need to manually run individual containers and link them together, which can become cumbersome in complex applications.

Example Docker Compose File

Here’s a simple example of a Docker Compose file for a web application that uses a web server (NGINX) and a database (PostgreSQL):

services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
  db:
    image: postgres:latest
    environment:
      POSTGRES_USER: admin
      POSTGRES_PASSWORD: password
      POSTGRES_DB: mydb

In this file, we define two services: web and db. The web service runs an NGINX container, and the db service runs a PostgreSQL container. Compose attaches both services to a shared default network, where each one can reach the other by its service name (for example, the hostname db).
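Because Compose gives each service a DNS name on the shared network, an application container added to this project could reach PostgreSQL at the hostname db. The sketch below assumes, hypothetically, that such an application service receives the same POSTGRES_* variables as the db service, and builds a PostgreSQL connection URL from them; 5432 is PostgreSQL’s default port.

```python
import os
from typing import Mapping, Optional
from urllib.parse import quote


def postgres_url(env: Optional[Mapping[str, str]] = None,
                 host: str = "db", port: int = 5432) -> str:
    """Build a connection URL for the db service from POSTGRES_* variables."""
    env = os.environ if env is None else env
    # Percent-encode credentials so characters like '@' or ':' don't break the URL
    user = quote(env["POSTGRES_USER"], safe="")
    password = quote(env["POSTGRES_PASSWORD"], safe="")
    return f"postgresql://{user}:{password}@{host}:{port}/{env['POSTGRES_DB']}"


config = {"POSTGRES_USER": "admin", "POSTGRES_PASSWORD": "password", "POSTGRES_DB": "mydb"}
print(postgres_url(config))
```

Note that the web service in the file above is a stock NGINX image that does not talk to the database; the helper only illustrates how an application service on the same Compose network would address it.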

Running Docker Compose

Once the docker-compose.yml file is created, you can start the entire application with a single command:

docker compose up

This command pulls the necessary images (if they’re not already on your machine) and starts the containers. To stop and remove the services, use:

docker compose down

With the older standalone Compose v1 tool, the equivalent commands are docker-compose up and docker-compose down.

Docker Compose significantly simplifies the management of multi-container applications.

Scaling Docker with Orchestration Tools

Container Orchestration

As applications grow, they typically need more containers running across more hosts. This is where container orchestration comes in. Docker provides native orchestration through Docker Swarm, but Kubernetes has become the leading orchestrator.

Container Orchestration Definition

Container orchestration automates the deployment, scaling, and management of containerized applications. It handles concerns such as load balancing, scheduling, fault tolerance, and service discovery across a cluster of machines, keeping your application highly available and scaling it as demand grows.
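At the heart of this automation is a reconciliation loop: the orchestrator repeatedly compares the desired state (for example, “run five replicas”) with what is actually running and acts on the difference, which is also how crashed containers get replaced. A toy, single-step version in Python, with hypothetical container names, might look like this; it models the idea only and is not code from Swarm or Kubernetes.

```python
from typing import List, Tuple


def reconcile(desired: int, running: List[str]) -> Tuple[int, List[str]]:
    """One reconciliation step: how many containers to start, and which to stop.

    A toy model of desired-state orchestration, not Swarm's or Kubernetes' code.
    """
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")
    if len(running) < desired:
        # Too few replicas (e.g. one crashed): start replacements
        return desired - len(running), []
    # Exactly enough or too many: stop the surplus, if any
    return 0, running[desired:]


# Two of five replicas are running, so three need to be started
print(reconcile(5, ["web.1", "web.2"]))
```

A real orchestrator runs this comparison continuously and also decides where each new container should be placed.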

Docker Swarm vs. Kubernetes

Docker Swarm is Docker’s built-in orchestration tool. It is easy to set up and integrates tightly with Docker. With Swarm, you can create a cluster of Docker hosts and deploy services across them using simple commands. It offers core features such as load balancing, scaling, and service discovery.

Kubernetes is a more feature-complete orchestration platform. Originally developed by Google, it has become the industry standard for running containers in production. Its features include rolling updates, self-healing, secret management, and horizontal autoscaling. Kubernetes has a steeper learning curve than Docker Swarm, but it offers the flexibility and control that complex, large deployments need.

Scaling Containers with Docker Swarm

To scale containers with Docker Swarm, you can use a single command to increase or decrease the number of running instances:

docker service scale web=5

This command scales the web service to five replicas across the swarm cluster, spreading the load and improving availability.
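Swarm’s scheduler places replicas using a spread strategy, roughly favoring nodes that run fewer tasks. The sketch below is a deliberately simplified model of that idea, with hypothetical node names; it ignores resource reservations, placement constraints, and node health, all of which the real scheduler takes into account.

```python
from typing import Dict, List


def spread(replicas: int, nodes: List[str]) -> Dict[str, int]:
    """Assign each replica to the node currently running the fewest (ties: list order)."""
    if not nodes:
        raise ValueError("need at least one node")
    counts = {node: 0 for node in nodes}
    for _ in range(replicas):
        # min() returns the first of several equally loaded nodes
        target = min(nodes, key=lambda node: counts[node])
        counts[target] += 1
    return counts


# Five replicas of "web" on a hypothetical three-node swarm
print(spread(5, ["node1", "node2", "node3"]))
```

Spreading replicas this way means that losing any single node takes down only a fraction of the service.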

In terms of robustness and ecosystem, Kubernetes is usually the better choice for enterprises and large-scale applications, while Docker Swarm offers a much simpler alternative for small projects or teams just starting with container orchestration.

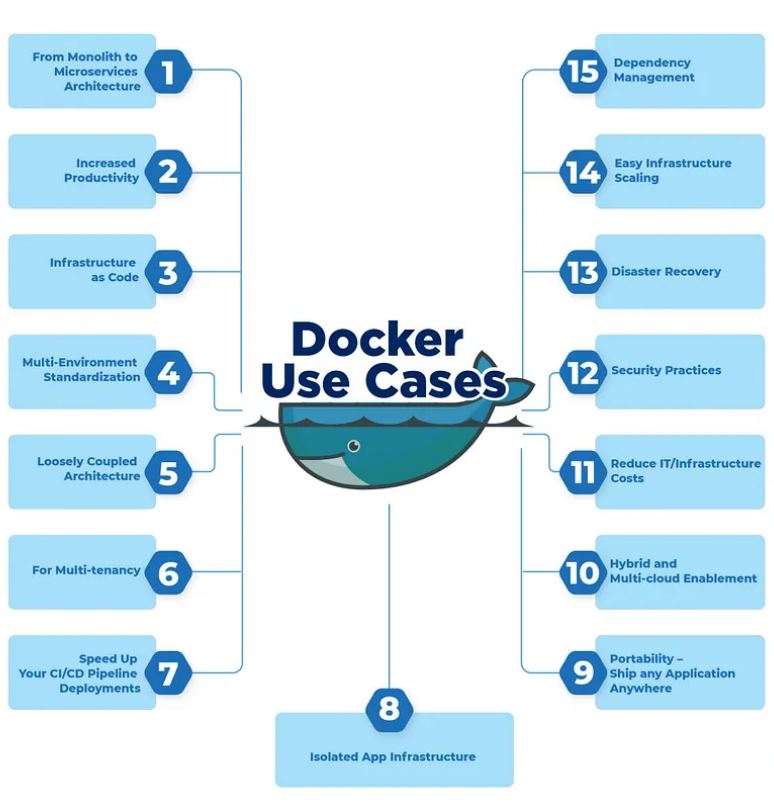

Common Docker Use Cases and Real-World Applications

Docker makes it easy to combine your own application with third-party services to build larger systems, and it fits a wide range of use cases across industries. Below are some of the most common situations where Docker outperforms other deployment approaches.

1. Microservices Architecture

In a microservices architecture, applications are broken down into smaller, independently deployable services. Each service owns a slice of functionality, such as user authentication or the product catalog, and interacts with other services through APIs. Docker is a natural fit for microservices because each service can run in its own isolated container, which makes it easy to deploy, scale, and manage services independently.

2. CI/CD Pipelines

Docker integrates well with CI/CD tools such as Jenkins, GitLab CI, and Travis CI, enabling faster builds, tests, and deployments. Because containers make code behave the same way in development, test, and production, you can automate the build process, run tests in isolation, and update applications without worrying about the details of each environment.

3. Cloud Deployments

Because Docker containers are highly portable, they are well suited to cloud deployments. Whether you use AWS, Google Cloud, or Microsoft Azure, Docker lets you package and ship your application together with all of its dependencies. Cloud providers also offer dedicated services such as Amazon ECS, Google Kubernetes Engine, and Azure Kubernetes Service for managing container-based applications at scale.

4. Development and Testing

Docker simplifies setting up and managing development environments. Instead of installing every dependency on their own machines, developers can spin up containers for the services an application depends on. This saves time and keeps environments consistent across the team. It also makes it easy to test an application in different environments that mimic various production scenarios, without changing the developer’s own machine.

Docker is now used across nearly every area of contemporary software engineering, from small startups to large enterprises. Its flexibility makes it an essential tool for software developers, system administrators, and DevOps engineers.

Docker Security Best Practices

Although Docker provides many security features out of the box, it is important to follow some basic practices to protect your containers from potential threats. Here are some tips for securing your Docker environment.

1. Use Official and Trusted Images

When pulling images from Docker Hub, make sure they come from trusted sources. Docker Official Images are curated by Docker and receive regular security updates. Other images are not vetted in the same way and can pose security risks: they may contain malware or malicious code.

2. Regularly Scan Images for Vulnerabilities

Docker provides tools for scanning images for known vulnerabilities. The older docker scan command has been deprecated in favor of Docker Scout, which checks your images against a database of known vulnerabilities:

docker scout cves my-image

Registries such as Docker Hub, and third-party tools such as Aqua Security and Clair, can also scan your images automatically and alert you to potential issues.

3. Limit Container Privileges

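By default, containers run as the root user, which is a security risk if the container is compromised. Configure your containers to run as a non-root user instead. The user must exist in the image, so create it before switching to it; on Debian-based images such as the slim Python images, for example, add the following lines to your Dockerfile:

RUN useradd --create-home nonroot
USER nonroot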

This limits the container’s access to the host system and reduces the potential impact of a security breach.

4. Isolate Containers with User Namespaces

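User namespaces allow you to map the root user in a container to a non-root user on the host system. This adds an extra layer of isolation and prevents containers from gaining elevated privileges on the host. To enable remapping, add the following setting to the daemon configuration file (/etc/docker/daemon.json on Linux) and restart the Docker daemon: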

{
  "userns-remap": "default"
}

Configuring Docker to remap user namespaces enhances container security, especially in environments where containers are exposed to the public internet.
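With "userns-remap": "default", Docker creates a user named dockremap and maps container user and group IDs onto the subordinate ID range assigned to it in /etc/subuid and /etc/subgid. The mapping is a simple offset, sketched below in Python; the entry dockremap:100000:65536 is an example, and the actual range on your host may differ.

```python
from typing import Tuple


def parse_subid_entry(line: str) -> Tuple[str, int, int]:
    """Parse an /etc/subuid or /etc/subgid line of the form name:start:count."""
    name, start, count = line.strip().split(":")
    return name, int(start), int(count)


def host_id(container_id: int, start: int, count: int) -> int:
    """Map a UID/GID inside the container to the unprivileged host ID it runs as."""
    if not 0 <= container_id < count:
        raise ValueError(f"ID {container_id} is outside the mapped range of {count} IDs")
    return start + container_id


_, start, count = parse_subid_entry("dockremap:100000:65536")  # example entry
print(host_id(0, start, count))     # container root -> 100000
print(host_id(1000, start, count))  # a regular container user -> 101000
```

So a process that is root inside the container appears on the host as UID 100000, an ordinary account with no special privileges.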

Docker in Production: Tips for Efficient Use

Running Docker in production is a different challenge from development and testing. Although Docker makes it easy to build and test applications locally, deploying containers reliably and efficiently at scale requires planning and sound practices. Here are some key tips for running Docker containers in production.

1. Use Docker Volumes for Persistent Data

Docker containers are ephemeral by default: data written inside a container is lost when the container is removed. This is fine for some stateless applications, but most real-world applications need persistent data. Docker volumes let you store data outside the container so that it survives even if the container is destroyed or replaced.

docker run -d -v /my/host/data:/data my-container

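In the example above, /my/host/data on the host machine is mounted at /data in the container, so the data persists across container lifecycles. Strictly speaking, mounting a host path like this is a bind mount; to let Docker manage the storage as a named volume instead, give a volume name in place of the host path:

docker run -d -v my-data:/data my-container

Volumes can also be shared between multiple containers, and they are the usual way to store data for stateful services such as databases.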
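A quick way to see persistence in action is an application that keeps its state under the mounted path. The hypothetical helper below stores a visit counter in /data (the container path from the example). Because the file lives on the mount rather than in the container’s writable layer, the count survives removing and recreating the container.

```python
from pathlib import Path


def bump_counter(data_dir: str = "/data") -> int:
    """Increment and return a counter stored in a file under data_dir.

    When data_dir is a volume or bind mount, the file (and the count)
    outlives the container that wrote it.
    """
    counter_file = Path(data_dir) / "visits.txt"
    count = int(counter_file.read_text()) if counter_file.exists() else 0
    count += 1
    counter_file.write_text(str(count))
    return count
```

This sketch is not safe for several containers writing at once; it only illustrates where persistent state should live.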

2. Managing Logs

In production, it’s essential to have proper logging in place to monitor the performance and behavior of your containers. By default, Docker logs are captured from the container’s standard output and standard error streams. However, for better log management, you should forward logs to a centralized logging service like ELK (Elasticsearch, Logstash, Kibana), Fluentd, or Graylog.

Docker allows you to configure logging drivers for containers using the --log-driver option:

docker run --log-driver syslog my-container

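This example sends the container’s logs to syslog, which by default means the host’s syslog daemon; a remote syslog server can be specified with --log-opt syslog-address=udp://<host>:514. By centralizing logs, you can monitor application health, debug issues, and track usage patterns effectively.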
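Whatever logging driver you choose, it only receives what the application writes to standard output and standard error. Here is a minimal Python sketch of an application configured to log to stdout; the logger name "app" and the format string are arbitrary choices for illustration.

```python
import logging
import sys


def configure_logging(level: int = logging.INFO) -> logging.Logger:
    """Send application logs to stdout, where Docker's logging driver picks them up."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s"))
    logger = logging.getLogger("app")
    logger.setLevel(level)
    logger.handlers[:] = [handler]  # replace rather than append, so repeated calls don't duplicate lines
    logger.propagate = False        # avoid printing each record a second time via the root logger
    return logger


log = configure_logging()
log.info("service started")
```

logging’s StreamHandler flushes after every record, so these lines show up promptly in docker logs. Plain print() output, by contrast, is block-buffered when stdout is not a terminal, which is why Python images often set PYTHONUNBUFFERED=1.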

3. Monitoring and Performance Optimization

Monitoring container performance is critical in production. Apart from basic commands such as docker stats, Docker does not ship a full monitoring stack, but it integrates with popular solutions like Prometheus, Grafana, and Datadog. These tools track metrics such as CPU usage, memory consumption, and network traffic, helping you identify bottlenecks and optimize container performance.

Docker provides the docker stats command to get a quick overview of container resource usage:

docker stats my-container

For more advanced monitoring, set up a Prometheus and Grafana stack to collect and visualize container metrics over time.
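For quick scripting, docker stats can print one JSON object per container when run with --no-stream --format "{{json .}}". The sketch below parses that output in Python. It assumes the Name, CPUPerc, and MemPerc fields that docker stats exposes, and the sample line is made up for illustration.

```python
import json
import subprocess
from typing import Dict


def parse_stats(output: str) -> Dict[str, Dict[str, float]]:
    """Turn `docker stats --format "{{json .}}"` lines into numbers per container name."""
    usage: Dict[str, Dict[str, float]] = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        row = json.loads(line)
        usage[row["Name"]] = {
            "cpu_percent": float(row["CPUPerc"].rstrip("%")),
            "mem_percent": float(row["MemPerc"].rstrip("%")),
        }
    return usage


def snapshot() -> Dict[str, Dict[str, float]]:
    """Take one reading of all running containers (requires the docker CLI)."""
    result = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_stats(result.stdout)


sample = '{"Name": "my-container", "CPUPerc": "0.25%", "MemPerc": "1.50%"}'  # made-up sample
print(parse_stats(sample))
```

A script like this is fine for ad hoc checks; for metrics over time, the Prometheus and Grafana setup described above is the better fit.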

4. Performance Tuning Tips

- Reduce Image Size: Smaller Docker images load faster and consume less disk space. Use slim or alpine base images to minimize image size.

- Limit Resources: Use Docker’s resource-limiting flags to prevent containers from consuming excessive CPU or memory. For example, you can limit a container to the equivalent of one CPU and 512 MB of RAM:

docker run -d --cpus="1" --memory="512m" my-container

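- Optimize Network Performance: In high-traffic applications, the NAT and port forwarding of Docker’s default bridge network can add overhead. Host networking avoids this at the cost of network isolation. Overlay networks are meant for communication across multiple hosts and add encapsulation overhead of their own, so choose them for multi-host setups rather than for raw speed.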
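To make the resource flags concrete: Docker reads memory suffixes as binary units (so 512m is 512 × 1024 × 1024 bytes), and its documentation describes --cpus="1" as equivalent to --cpu-period="100000" --cpu-quota="100000", meaning the container may use up to 100 ms of CPU time in every 100 ms period. The helpers below sketch that arithmetic; the memory parser is simplified and accepts only a whole number with an optional b, k, m, or g suffix.

```python
UNIT_BYTES = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
DEFAULT_CPU_PERIOD_US = 100_000  # 100 ms scheduling period, in microseconds


def memory_bytes(value: str) -> int:
    """Convert a --memory value like "512m" to bytes (simplified: integer + optional suffix)."""
    value = value.strip().lower()
    if value and value[-1] in UNIT_BYTES:
        return int(value[:-1]) * UNIT_BYTES[value[-1]]
    return int(value)


def cpu_quota_us(cpus: float, period_us: int = DEFAULT_CPU_PERIOD_US) -> int:
    """CPU time (in microseconds) a container may use per period for a given --cpus value."""
    return round(cpus * period_us)


print(memory_bytes("512m"))  # 536870912
print(cpu_quota_us(1))       # 100000
print(cpu_quota_us(1.5))     # 150000
```

Because the CPU limit is a time quota rather than a pinned core, a container with --cpus="1" may run on any core, as long as its total usage stays within the quota.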

By following these tips, you can ensure that your Docker containers run efficiently and reliably in production environments.

Docker and Cloud Computing

Docker is well suited to cloud-native applications: you build an image once and can deploy it consistently on any cloud provider, whether AWS, GCP, or Azure. Major cloud platforms offer services optimized for containers, which makes it relatively easy to manage them at scale.

Docker on AWS

AWS offers several services for running Docker containers, including Amazon Elastic Container Service (ECS), Amazon Elastic Kubernetes Service (EKS), and AWS Fargate.

- Amazon ECS: ECS is a fully managed container orchestration service for running Docker containers on AWS infrastructure. It integrates with other AWS services such as Elastic Load Balancing (ELB), Amazon CloudWatch, and AWS IAM for load balancing, monitoring, and security.

- AWS Fargate: Fargate is a serverless compute engine for containers that works with both ECS and EKS. With Fargate, you do not need to provision or manage servers; you define your containers, and Fargate runs them.

- Amazon EKS: EKS is AWS’s managed Kubernetes service. It lets teams already familiar with Kubernetes take advantage of AWS’s scalability and reliability.

Docker on Google Cloud

Google Cloud’s Kubernetes offering, Google Kubernetes Engine (GKE), is one of the most popular platforms for running Docker containers in production. GKE provides automatic updates, scaling, and monitoring out of the box. Google also offers Cloud Run, a fully managed service for running containers directly without managing servers.

Docker on Microsoft Azure

Like Google and AWS, Microsoft Azure provides a managed Kubernetes service, Azure Kubernetes Service (AKS). Through Azure Container Instances (ACI), it can also run containers directly in the cloud without provisioning any virtual machines.

Hybrid Cloud and Multicloud Deployments

Because containers run the same way everywhere, Docker makes it easier to build hybrid cloud and multicloud applications that span several cloud providers or combine on-premises and cloud environments. Tools like Docker Enterprise and Kubernetes simplify multicloud deployments by providing a single platform for managing containers across environments.

Docker brings agility and portability to modern cloud environments. Whether you are running simple microservices or complex distributed systems, Docker and cloud platforms together help you tackle scalability, reliability, and ease of use.

The Future of Docker

Docker has evolved continuously since its launch, and its outlook remains strong. It has adapted to new trends and demands in technology, and it is likely to remain a primary player in containerization and cloud-native applications.

Serverless Computing

Serverless architecture, in which developers do not have to manage servers or infrastructure, is one of the newer trends in cloud computing. Docker initially made its name by containerizing long-running applications, but today it has found a place in the serverless world too.

Services such as AWS Fargate, Google Cloud Run, and Azure Container Instances run containers in a serverless fashion, abstracting away the underlying infrastructure. As serverless adoption grows, Docker will need to keep maturing to fit this new paradigm.

Edge Computing

Another area where Docker is making inroads is edge computing: running applications closer to the end user, for example on IoT sensors, industrial machines, or local servers. Docker’s lightweight footprint makes it well suited to running containerized applications on resource-constrained edge devices.

As edge computing expands across more locations and devices, Docker is likely to become even more central to managing distributed, containerized applications.

Kubernetes and Container Orchestration

Docker’s popularization of containers paved the way for Kubernetes to become the de facto orchestration standard. Docker’s integration with Kubernetes keeps improving, and tighter integration with Kubernetes and other orchestration tools can be expected in the future.

Docker is also improving Docker Desktop, which includes an optional built-in Kubernetes cluster, giving developers more capabilities for local development, testing, and delivering containers to Kubernetes environments.

Security and Compliance

As more containerized applications reach production, security and compliance remain top priorities. Docker has added enhanced security features, including better vulnerability scanning, a more secure image signing system, and improvements to runtime protection.

Docker is expected to keep adding security tooling so that containerized applications can be deployed safely, both in the cloud and on-premises.

In short, Docker’s future is closely tied to cloud-native technologies, serverless computing, and edge computing. Its flexibility should keep it central to the software development ecosystem for years to come.

Docker in a Nutshell

The Docker platform has transformed the way developers build, ship, and run applications. Docker containers provide consistent environments everywhere, eliminating the headaches of environment-specific bugs and incompatibilities, which makes Docker a key technology in modern software development. Its impact is especially visible in interconnected, heterogeneous systems such as microservices and cloud-native applications.

In this guide, I covered topics ranging from Docker’s architecture to the commands needed to deploy containers in a production environment. For developers, system administrators, and DevOps engineers alike, mastering Docker will significantly enhance your ability to build and manage scalable, reliable applications.

As Docker continues to embrace trends such as serverless computing, edge computing, and Kubernetes orchestration, its importance will only grow. By following the best practices in this guide, you can unlock Docker’s full potential, streamline your development workflows, and help ensure that your applications run reliably and securely in any environment.

So, what are you waiting for? Dive into Docker, containerize your applications, and join the millions of developers transforming the software development landscape through it!

FAQs About Docker

1. What is the difference between Docker and a Virtual Machine (VM)?

Containers and VMs differ in how they operate. Each VM runs a full OS, which requires more resources and takes longer to start, whereas Docker containers share the host OS kernel, which makes them lighter and faster.

2. Is Docker free to use?

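Largely, yes. Docker Engine, the open-source container runtime (formerly Docker Community Edition), is free and more than sufficient for individuals and small teams. Docker Desktop is free for personal use, education, and small businesses, while larger organizations need a paid subscription. The former Docker Enterprise Edition, aimed at large companies needing extra security, management, and support features, is now offered by Mirantis.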

3. Can Docker replace virtual machines completely?

Not really. Docker suits use cases that need isolated environments with minimal overhead, such as microservices and stateless applications. Virtual machines are still needed when you must run different operating systems side by side, or when strict isolation is required, because VMs provide full OS-level separation.

4. What is Docker Hub, and how do I use it?

Docker Hub is a public, cloud-based registry where developers store and share Docker images. Docker Official Images are curated by Docker, while community images are published by individual developers and organizations. You can pull images from Docker Hub to use in your own projects, or push your own images there for others to use.

To pull an image from Docker Hub, use the following command:

docker pull <image-name>
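When you pass a short name such as nginx, Docker fills in defaults: the Docker Hub registry (docker.io), the library/ namespace used by Official Images, and the latest tag. The sketch below approximates those rules in Python; it is a simplified illustration rather than Docker’s reference parser, and names like myuser/app are hypothetical.

```python
def normalize_image(ref: str) -> str:
    """Expand a short image reference into registry/namespace/name:tag form (simplified)."""
    name, sep, digest = ref.partition("@")  # keep any @sha256:... digest as-is
    first, slash, rest = name.partition("/")
    # The first path component counts as a registry host only if it looks like one:
    # it contains "." or ":" or is "localhost".
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name
        if "/" not in path:
            path = "library/" + path  # Official Images live under library/
    last_component = path.rsplit("/", 1)[-1]
    if ":" not in last_component and not sep:
        path += ":latest"  # default tag when neither a tag nor a digest is given
    return f"{registry}/{path}{sep}{digest}"


for ref in ["nginx", "nginx:alpine", "myuser/app:1.0", "localhost:5000/app"]:
    print(ref, "->", normalize_image(ref))
```

This is why docker pull nginx and docker pull docker.io/library/nginx:latest fetch the same image.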

5. Can I run Docker on Windows?

Yes. Docker Desktop for Windows runs Linux containers inside a lightweight virtual machine (using the WSL 2 backend or Hyper-V). It can also run native Windows containers, which are built on Windows base images and run directly on the Windows kernel.

6. How secure is Docker?

Docker’s key security features include container isolation, image signing, and vulnerability scanning. Still, no technology is completely immune to security threats. Following Docker security best practices, use trusted images, avoid granting containers more privileges than they need, and scan for vulnerabilities regularly to keep your containers secure.

7. What is a Dockerfile, and why do I need it?

A Dockerfile is a script made up of a list of instructions used to build a Docker image. It defines the application’s base image, its dependencies, environment variables, and other configuration required to create a Docker container. Dockerfiles are vital because they let developers automate image builds in a consistent, repeatable way.

8. What happens to my data when a Docker container is destroyed?

By default, any data stored inside a Docker container is lost when the container is destroyed. To persist data beyond the life of a container, you can use Docker volumes or bind mounts to store data on the host machine. That way, your data remains if a container is restarted or removed.

9. What is Docker Compose, and when should I use it?

Docker Compose is a tool that lets you define and run multi-container applications, managing multiple containers with a simple YAML file called docker-compose.yml. Use it if your application consists of several services, for instance, a web server, database, and cache, which should be running together in different containers.